Metagenomic Profiling

You can find this application in the demos folder of your Jupyter notebook environment.

- samplesheet.csv

- mag_workflow.ipynb

Metagenomic analysis enables the study of microbial communities, providing insights into their diversity and roles in various environments. The nf-core/mag pipeline offers a powerful, reproducible approach to metagenomic assembly and profiling. By using the Nextflow Engine on Camber, researchers can easily run and manage complex workflows, ensuring efficient analysis and scalability.

The first step is to import the nextflow package:

```python
from camber import nextflow
```

Here's an example of how to set up the configuration and execute a job:

- `command`: the full Nextflow command that runs the nf-core/mag pipeline.
  - `--input ./samplesheet.csv`: the path of the `samplesheet.csv` file, relative to the current notebook. If you use local FASTQ files, the file locations listed inside `samplesheet.csv` are relative paths as well.
  - `--outdir ./outputs`: the directory where the job stores its output data.

- `node_size="XXSMALL"`: the size of the nodes that run the job.
- `num_nodes=8`: the number of nodes, which lets workflow tasks run in parallel when possible.
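You can also keep the pipeline parameters in a dictionary instead of one long backslash-continued f-string, and build the command programmatically. Below is a minimal sketch. `build_nextflow_command` is a hypothetical helper and is not part of the Camber SDK. It assumes the resulting string is passed unchanged to `nextflow.create_job(command=...)` and is parsed by a POSIX-style shell, which is why values are quoted with `shlex.quote`. Python booleans are written as `true`/`false`, matching the `--skip_krona true` style used in this tutorial.

```python
import shlex


def build_nextflow_command(pipeline, params, revision=None):
    """Assemble a `nextflow run` command string from a dict of parameters.

    Hypothetical helper for illustration; not part of the Camber SDK.
    """
    parts = ["nextflow", "run", pipeline]
    for name, value in params.items():
        # Write Python booleans the way the tutorial does: true / false
        if isinstance(value, bool):
            value = str(value).lower()
        # Pipeline parameters take a double-dash prefix; quote values
        # so that paths containing spaces survive shell parsing
        parts += [f"--{name}", shlex.quote(str(value))]
    if revision is not None:
        # -r (single dash) is a Nextflow option that pins the pipeline release
        parts += ["-r", revision]
    return " ".join(parts)


# Example: a subset of the parameters used in this tutorial
command = build_nextflow_command(
    "nf-core/mag",
    {
        "input": "./samplesheet.csv",
        "outdir": "./outputs",
        "skip_krona": True,
        "skip_gtdbtk": True,
        "skip_maxbin2": True,
    },
    revision="3.4.0",
)
print(command)
```

Add database URLs such as `kraken2_db` to the dictionary the same way. With a dictionary, you can toggle a single `skip_*` flag without editing a multi-line string.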

```python
# Declare URLs to download necessary files
kraken2_db = "https://raw.githubusercontent.com/nf-core/test-datasets/mag/test_data/minigut_kraken.tgz"
centrifuge_db = "https://raw.githubusercontent.com/nf-core/test-datasets/mag/test_data/minigut_cf.tar.gz"
busco_db = "https://busco-data.ezlab.org/v5/data/lineages/bacteria_odb10.2024-01-08.tar.gz"
gtdb_db = "https://data.ace.uq.edu.au/public/gtdb/data/releases/release220/220.0/auxillary_files/gtdbtk_package/full_package/gtdbtk_r220_data.tar.gz"

# Build the Nextflow command; each trailing backslash continues the string
# on the next line, and -r pins nf-core/mag to release 3.4.0
command = f"nextflow run nf-core/mag \
    --input ./samplesheet.csv \
    --outdir ./outputs \
    --kraken2_db {kraken2_db} \
    --centrifuge_db {centrifuge_db} \
    --busco_db {busco_db} \
    --gtdb_db {gtdb_db} \
    --skip_krona true \
    --skip_gtdbtk true \
    --skip_maxbin2 true \
    -r 3.4.0"

# Submit the job to the Nextflow engine on Camber
nf_mag_job = nextflow.create_job(
    command=command,
    node_size="XXSMALL",
    num_nodes=8
)
```

Next, check the job status:

```python
nf_mag_job.status
```

To monitor job execution, you can stream the job logs in real time with the `read_logs` method:

```python
nf_mag_job.read_logs()
```

When the job is done, you can browse and download its results and logs in two ways:

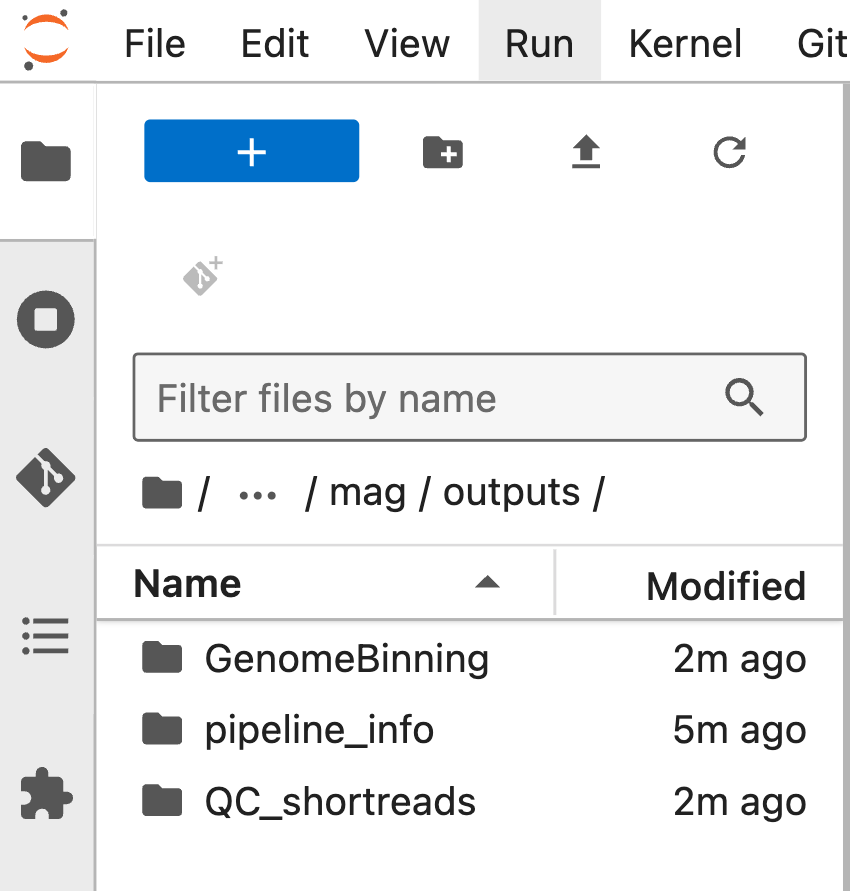

- Browse the data directly in the notebook environment.

- Go to the Stash UI.
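To get a quick inventory of what the job produced, you can also list the output files from a notebook cell. Below is a minimal sketch that uses only the standard library. `list_outputs` is a hypothetical helper. It assumes the directory set with `--outdir` (here `./outputs`) is visible from the notebook's working directory.

```python
from pathlib import Path


def list_outputs(outdir="./outputs"):
    """Return the files under `outdir` as sorted, POSIX-style relative paths.

    Hypothetical helper; returns an empty list if `outdir` does not exist yet.
    """
    root = Path(outdir)
    if not root.is_dir():
        return []
    # rglob("*") walks all subdirectories; keep only regular files
    return sorted(
        path.relative_to(root).as_posix()
        for path in root.rglob("*")
        if path.is_file()
    )


for name in list_outputs("./outputs"):
    print(name)
```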

This tutorial demonstrates how Camber simplifies running the nf-core/mag pipeline. You can try it with your own metagenomic data, easily setting up the pipeline, monitoring job status, and retrieving results. With Camber’s cloud infrastructure, you can scale your analysis effortlessly and focus on deriving insights from your data.

Note: Files and folders saved in the demos directory are temporary and are reset after each JupyterHub session. To store your data permanently, change the value of `--outdir` to a location outside the demos directory.
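You can follow this advice without retyping the whole command by rewriting only the `--outdir` value. Below is a minimal sketch. `with_outdir` is a hypothetical helper, not a Camber or Nextflow API. It assumes the value is passed as a separate token (`--outdir ./outputs`, not `--outdir=./outputs`). The destination path in the example is a placeholder: replace it with a location you know persists across sessions.

```python
import shlex


def with_outdir(command, new_outdir):
    """Return `command` with its --outdir value replaced by `new_outdir`.

    Hypothetical helper; appends --outdir if the command has none.
    """
    # Split the way a POSIX shell would, so quoted values stay intact
    tokens = shlex.split(command)
    try:
        i = tokens.index("--outdir")
    except ValueError:
        return shlex.join(tokens + ["--outdir", new_outdir])
    if i + 1 == len(tokens):
        raise ValueError("--outdir is missing its value")
    tokens[i + 1] = new_outdir
    # shlex.join (Python 3.8+) re-quotes any token that needs it
    return shlex.join(tokens)


example = "nextflow run nf-core/mag --input ./samplesheet.csv --outdir ./outputs -r 3.4.0"
print(with_outdir(example, "/path/to/persistent/mag_outputs"))  # placeholder path
```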